Testing

Running Tests

As you develop content within a project repository, it’s generally good practice to do test-driven development. Test creation will be covered in later sections, but for running tests, you can use two different commands:

~$ make test

Alternatively, you can invoke pytest directly to run the tests:

~$ pytest

Since pytest is used for running the test suite, you can use it to run specific tests or test classes in your repository. For example:

~$ # A single test file
~$ pytest tests/test_qc_workflow.py

~$ # All tests in a class
~$ pytest tests/test_qc_workflow.py::TestQCWorkflows

~$ # A specific test method in a class
~$ pytest tests/test_qc_workflow.py::TestQCWorkflows::test_check_qc_values

In addition to running tests against a local instance, you can run them against an L7|ESP instance on a different server. To specify that instance on the command line, use the --host and --port options:

~$ pytest --host test.l7informatics.com --port 8005

Note

When running tests against a public URL, you may need to connect to the application over SSL. To do so, use the --ssl option and set the port to 443, the standard HTTPS port. For example:

~$ # connecting to https://test.l7informatics.com

~$ pytest --host test.l7informatics.com --port 443 --ssl
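The pairing of --ssl with port 443 follows from how a base URL is usually assembled: SSL switches the scheme to https, whose default port is 443. The sketch below uses a hypothetical esp_base_url helper (not part of the L7|ESP SDK) to illustrate that relationship; the client's own URL handling may differ.

```python
from urllib.parse import urlunsplit


def esp_base_url(host, port, ssl=False):
    """Hypothetical helper: combine --host/--port/--ssl into a base URL."""
    scheme = "https" if ssl else "http"
    default_port = 443 if ssl else 80
    # Leave the port out when it is the default for the chosen scheme.
    netloc = host if port == default_port else "{}:{}".format(host, port)
    return urlunsplit((scheme, netloc, "", "", ""))
```

For example, esp_base_url('test.l7informatics.com', 443, ssl=True) yields https://test.l7informatics.com, the URL named in the comment above.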

To see other options that are useful during test execution, check the pytest help output:

~$ pytest -h

usage: pytest [options] [file_or_dir] [file_or_dir] [...]

positional arguments:
  file_or_dir

...

custom options:
  -N, --no-import       Skip IMPORT definitions when running tests.
  -C, --teardown        Teardown content after running tests.
  -P, --port            Port for accessing L7|ESP.
  -S, --ssl             Use SSL when connecting to L7|ESP.
  -H, --host            Host for accessing L7|ESP.
  -U, --email           Email for admin user.
  -X, --password        Password for admin user.
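Custom options like these are typically added to pytest from a conftest.py. As a rough sketch, assuming they are registered through pytest's standard pytest_addoption hook, the registration might look like the following. Only the option names and help text come from the output above; the dest names and None defaults are assumptions, and the SDK's actual setup may differ.

```python
# conftest.py: hypothetical sketch of how the custom options could be registered.


def pytest_addoption(parser):
    """Register the L7|ESP options listed under "custom options"."""
    group = parser.getgroup("custom options")
    # Boolean switches.
    group.addoption("-N", "--no-import", dest="no_import", action="store_true",
                    default=False, help="Skip IMPORT definitions when running tests.")
    group.addoption("-C", "--teardown", dest="teardown", action="store_true",
                    default=False, help="Teardown content after running tests.")
    group.addoption("-S", "--ssl", dest="ssl", action="store_true",
                    default=False, help="Use SSL when connecting to L7|ESP.")
    # Connection settings; None is an assumed "use the client's default" marker.
    group.addoption("-H", "--host", dest="host", default=None,
                    help="Host for accessing L7|ESP.")
    group.addoption("-P", "--port", dest="port", type=int, default=None,
                    help="Port for accessing L7|ESP.")
    group.addoption("-U", "--email", dest="email", default=None,
                    help="Email for admin user.")
    group.addoption("-X", "--password", dest="password", default=None,
                    help="Password for admin user.")
```

A fixture could then read a value with request.config.getoption("host"). Uppercase short flags are used because pytest reserves lowercase short options for itself.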

Creating Tests

There are generally two types of tests you can write to verify functionality as you develop content within L7|ESP:

Functional Tests - Simple tests that can be run outside of the context of testing Workflows and related content. Examples of this type of testing include testing connections, integration points, server-side extensions, etc.

Content Tests - Configuration-driven tests that verify the functionality of Workflows and other types of content. These tests are generally written as test classes that use L7|ESP SDK tools to automate much of the content testing.

Functional Tests

As a gentle introduction, let’s write a simple test to verify that L7|ESP is connected and accepting requests. We’ll need to create a test file in the tests directory named test_status.py. The contents of that file should look something like:

# -*- coding: utf-8 -*-

import pytest


def test_status():
    import esp

    running = esp.status()
    assert running, 'Could not connect to L7|ESP via python client!'

After creating tests, you can run them using pytest (this example uses the test defined above):

~$ pytest tests/test_status.py
==================================== test session starts ====================================
platform darwin -- Python 3.7.1, pytest-3.10.0, py-1.7.0, pluggy-0.8.0 -- /usr/local/opt/python/bin/python3.7
cachedir: .pytest_cache
collected 1 item

tests/test_status.py::test_status Connection established!
PASSED

================================= 1 passed in 0.68 seconds ==================================

If L7|ESP is not running, the test fails and you’ll see output like the following:

~$ pytest tests/test_status.py
==================================== test session starts ====================================
platform darwin -- Python 3.7.1, pytest-3.10.0, py-1.7.0, pluggy-0.8.0 -- /usr/local/opt/python/bin/python3.7
cachedir: .pytest_cache
collected 1 item

tests/test_status.py::test_status FAILED

================================= 1 failed in 0.68 seconds ==================================

Now that we’ve created a test for some specific functionality in the application, let’s talk about how to create tests for custom content defined in the L7|ESP SDK.

Content Tests

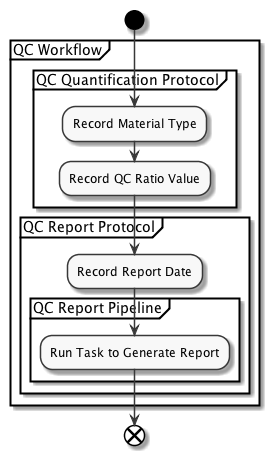

Along with functional testing, we can leverage L7|ESP SDK tools to help with integration testing of content defined in the L7|ESP SDK. By now, you’re familiar with the config-style format for defining content, so let’s define tests that verify the functionality of a simple QC Workflow. For this example, our Workflow will have two parts:

A Protocol to capture QC metadata about a Sample.

A Protocol to generate a Report using a Pipeline.

Here’s a schematic detailing the components of that Workflow at a high level:

Now that we know what type of content we’re going to create, let’s make some content files. First, we need to create our Workflow:

# Contents of: content/workflows/QC-Workflow.yml
QC Workflow:
  desc: Workflow to measure QC values and generate a QC report.
  tags:
    - quickstart
    - qc
  protocols:
    - QC Quantification:
        protocol: standard
        variables:
          - Type:
              rule: dropdown
              dropdown:
                - 'DNA'
                - 'RNA'
          - Ratio:
              rule: numeric
    - QC Report:
        protocol: pipeline
        pipeline: QC Report
        variables:
          - material:
              rule: text
              value: "{{ column_value('Type', 'QC Quantification') }}"
              visible: false
          - ratio:
              rule: numeric
              value: "{{ column_value('Ratio', 'QC Quantification') }}"
              visible: false
          - Report Date:
              rule: date

And we also need to create the Pipeline that our Workflow references:

# Contents of: content/pipelines/QC-Report.yml
QC Report:
  report:
    name: Result Report
    elements:
      - - depends:
            - {file: Result Report, tasknumber: 1}
          type: raw_file
      - []
  tasks:
    - Generate QC Report:
        desc: Task to generate QC report from workflow metadata
        cmd: |+
          # Simple Task that determines if the specified 'ratio' is in the proper range
          RNA_MIN=1.8
          RNA_MAX=2.1
          DNA_MIN=1.7
          DNA_MAX=2.0
          TYPE="{{ material }}"
          RATIO="{{ ratio }}"

          if [ $TYPE = "RNA" ]; then
            PASS=`echo "$RNA_MIN <= $RATIO && $RATIO <= $RNA_MAX" | bc`;
          elif [ $TYPE = 'DNA' ]; then
            PASS=`echo "$DNA_MIN <= $RATIO && $RATIO <= $DNA_MAX" | bc`;
          fi
          echo $PASS

          if [[ $PASS = 1 ]]; then
            echo "<b>Your sample <font color='green'>Passed</font> and contains pure $TYPE</b>" >> result.html
          else
            echo "<b>Your sample <font color='red'>Failed</font> and is NOT pure $TYPE</b>" >> result.html
          fi
        files:
          - Result Report:
              file_type: html
              filename_template: "{{ 'result.html' }}"
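When writing expected values for tests, it can help to mirror the Task's range check in Python. The sketch below is a hypothetical helper, not part of the L7|ESP SDK; its bounds are copied from the cmd script above and are inclusive, like the <= comparisons passed to bc.

```python
from decimal import Decimal

# Inclusive ratio ranges copied from the "Generate QC Report" task.
QC_RANGES = {
    "RNA": (Decimal("1.8"), Decimal("2.1")),
    "DNA": (Decimal("1.7"), Decimal("2.0")),
}


def qc_passes(material, ratio):
    """Return True if ratio lies within the range for material.

    Decimal keeps boundary values such as "1.7" exact, as bc does.
    Unknown materials fail, just as an empty $PASS does in the script.
    """
    bounds = QC_RANGES.get(material)
    if bounds is None:
        return False
    low, high = bounds
    return low <= Decimal(str(ratio)) <= high
```

For instance, qc_passes("DNA", "1.7") is True because a ratio sitting exactly on the lower bound still passes.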

Now that our content is defined, we can use L7|ESP SDK tools to write a simple test that imports these files and verifies that they exist in the system. This test also verifies that there are no syntax errors in the Workflow definitions. The test file, tests/test_qc_workflow.py, can look like the following:

# -*- coding: utf-8 -*-

# imports
import os
import unittest

from . import CONFIG, RESOURCES, CONTENT
from esp.testing import ModelLoaderMixin


# tests
class TestQCWorkflows(ModelLoaderMixin, unittest.TestCase):

    IMPORT = dict(
        Workflow=[
            os.path.join(CONTENT, 'workflows', 'QC-Workflow.yml'),
        ],
        Pipeline=[
            os.path.join(CONTENT, 'pipelines', 'QC-Report.yml'),
        ]
    )

Running this test will result in the following output:

~$ pytest tests/test_qc_workflow.py
================================== test session starts ===================================
platform darwin -- Python 3.7.1, pytest-4.1.0, py-1.7.0, pluggy-0.8.0 -- /usr/local/opt/python/bin/python3.7
cachedir: .pytest_cache
collected 1 item

tests/test_qc_workflow.py::TestQCWorkflows::test__content
INFO:root:Clearing existing content from database.
INFO:root:Creating Task: Generate QC Report
INFO:root:Creating Pipeline: QC Report Pipeline
INFO:root:Creating PipelineReport: QC Report
INFO:root:Creating Protocol: QC Quantification
INFO:root:Creating Protocol: QC Report
INFO:root:Creating Workflow: QC Workflow
Successfully imported config data.
PASSED

================================ 1 passed in 3.47 seconds ================================
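Because each IMPORT entry is a file path (or an inline dictionary), a mistyped file name may only surface once the import actually runs. The sketch below is a hypothetical pre-flight helper, not an SDK function, that lists path entries which do not exist on disk while skipping inline dictionary definitions.

```python
import os


def missing_import_files(spec):
    """Return (model_type, path) pairs for IMPORT/DATA paths that don't exist.

    String entries are treated as config file paths; dict entries are
    inline model definitions and are skipped.
    """
    missing = []
    for model_type, entries in spec.items():
        for entry in entries:
            if isinstance(entry, str) and not os.path.isfile(entry):
                missing.append((model_type, entry))
    return missing
```

A quick check such as assert not missing_import_files(TestQCWorkflows.IMPORT) would flag a path typo before any content is created.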

Along with testing Workflow definitions, we can also test that the Workflow works properly when used in an Experiment. To do so, we can use the same format as above but add a DATA attribute that specifies which Projects and Experiments need to be created. For this example, we’ll create a Project and two Experiments to exercise the Workflow. Here are the config files for the two Experiments:

# Contents of tests/resources/QC-Test-1.yml
My QC Experiment 1:
  submit: True
  project: QC Project
  workflow: QC Workflow
  samples:
    - ESP001
    - ESP002
  protocols:
    - QC Quantification:
        data:
          ESP001:
            Type: DNA
            Ratio: 1.8
          ESP002:
            Type: RNA
            Ratio: 1.9

# Contents of tests/resources/QC-Test-2.yml
My QC Experiment 2:
  submit: True
  project: QC Project
  workflow: QC Workflow
  samples:
    - ESP003
    - ESP004
  protocols:
    - QC Quantification:
        data:
          ESP003:
            Type: DNA
            Ratio: 1.7
          ESP004:
            Type: RNA
            Ratio: 2.0

After creating these two configs, we can update the test class to create them once the Workflows have been imported. Here is an updated version of the original test file:

# -*- coding: utf-8 -*-

# imports
import os
import unittest

from . import CONFIG, RESOURCES, CONTENT
from esp.testing import ModelLoaderMixin


# tests
class TestQCWorkflows(ModelLoaderMixin, unittest.TestCase):

    IMPORT = dict(
        Workflow=[
            os.path.join(CONTENT, 'workflows', 'QC-Workflow.yml'),
        ],
        Pipeline=[
            os.path.join(CONTENT, 'pipelines', 'QC-Report.yml'),
        ]
    )

    DATA = dict(
        Project=[
            {'name': 'QC Project'}
        ],
        Experiment=[
            os.path.join(RESOURCES, 'QC-Test-1.yml'),
            os.path.join(RESOURCES, 'QC-Test-2.yml'),
        ]
    )

Let’s go over the components of this test class in more detail:

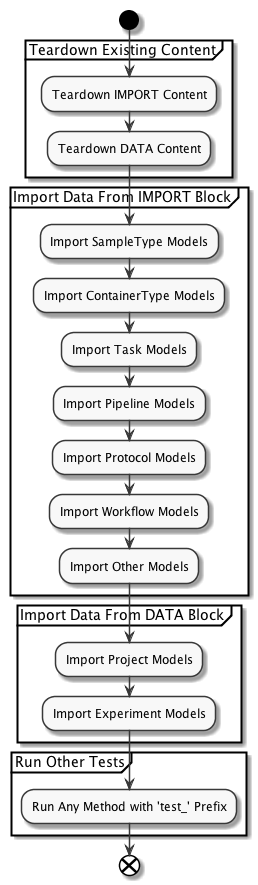

ModelLoaderMixin - A test mixin used for managing content imports and setup/teardown during testing. For testing content in this way, you’ll want to include both esp.testing.ModelLoaderMixin and unittest.TestCase in your tests.

IMPORT - A class variable that is a dictionary of mappings from model type to the models that should be imported as part of the tests. For information on the types of models you can create configuration for, see the documentation for the Python client.

DATA - A class variable that is similar to IMPORT, but is reserved specifically for creating Experiments and Projects for testing content.

Under the hood, content defined in the IMPORT and DATA blocks of a test class is created through the Python client by calling Model.create() for the corresponding model. For example, if your tests look like:

class TestQCWorkflows(ModelLoaderMixin, unittest.TestCase):

    IMPORT = dict(
        SampleType=[
            os.path.join(RESOURCES, 'inventory', 'Sample-Types.yml'),
            {
                'name': 'My Sample Type',
                'desc': 'Description for My Sample Type'
            }
        ]
    )

The configuration will then be created with code equivalent to the following:

import os

from esp.models import SampleType

# RESOURCES is the resources directory imported in the test module.
SampleType.create(
    os.path.join(RESOURCES, 'inventory', 'Sample-Types.yml')
)
SampleType.create(
    name='My Sample Type',
    desc='Description for My Sample Type'
)
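To make the dispatch concrete, here is a simplified, hypothetical stand-in for ModelLoaderMixin written with plain unittest. It is not the SDK's implementation: the MODELS registry is an assumption standing in for esp.models, and the real mixin does more (for example, teardown). It only shows how inline dictionaries become keyword arguments and file paths become a single positional argument to create(), with IMPORT handled before DATA so that Experiments are created after the Workflows they use.

```python
import unittest


class SimpleModelLoaderMixin:
    """Hypothetical, simplified stand-in for esp.testing.ModelLoaderMixin."""

    MODELS = {}  # model type name -> class exposing create() (assumed registry)
    IMPORT = {}
    DATA = {}

    @classmethod
    def _create_all(cls, spec):
        created = []
        for model_type, entries in spec.items():
            model = cls.MODELS[model_type]
            for entry in entries:
                if isinstance(entry, dict):
                    # Inline definition: keys become keyword arguments.
                    created.append(model.create(**entry))
                else:
                    # Config file path: passed as one positional argument.
                    created.append(model.create(entry))
        return created

    @classmethod
    def setUpClass(cls):
        super().setUpClass()
        # Content (IMPORT) first, then the Projects/Experiments (DATA) that use it.
        cls.created = cls._create_all(cls.IMPORT) + cls._create_all(cls.DATA)
```

Used as SimpleModelLoaderMixin together with unittest.TestCase, this reproduces the two SampleType.create() calls shown above for the example IMPORT block.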

For additional context, the following flow diagram describes testing steps using the format described above (i.e. any test using a ModelLoaderMixin):

Using Configuration

In addition to explicitly defining what configuration you want to include in your testing, you can also import default configuration, specified in seed files within the repository (see the Content section for more information on those seed files).

To import a seed file in your testing, you can update your test class to look like the following (keeping with the example used above):

class TestQCWorkflows(ModelLoaderMixin, unittest.TestCase):

    SEED = os.path.join(ROLES, 'demos', 'content.yml')

    DATA = dict(
        Project=[
            {'name': 'QC Project'}
        ],
        Experiment=[
            os.path.join(RESOURCES, 'QC-Test-1.yml'),
            os.path.join(RESOURCES, 'QC-Test-2.yml'),
        ]
    )